May 14, 2024

Vertex AI is Google’s development platform for building scalable generative AI applications, ensuring developers and enterprises benefit from Google’s highest quality standards for privacy, scalability, reliability, and performance.

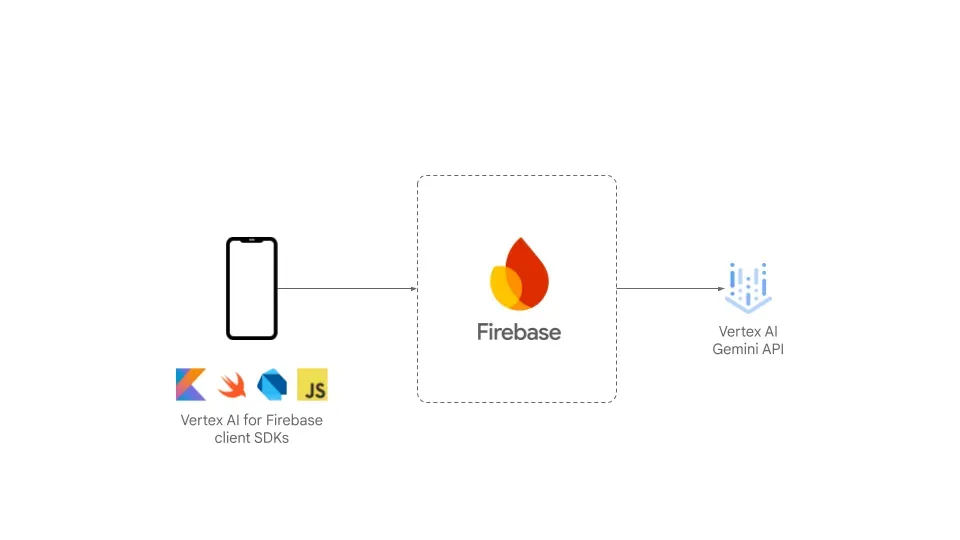

Until now, using Vertex AI required you to set up a backend in a language like Python, Java, Node.js, or Go, and then expose your AI features to your client apps through a service layer. Not every application needs or benefits from a service layer; many use cases can be implemented with a serverless approach.

Today, we’re excited to announce Vertex AI for Firebase, including a suite of client SDKs for your favorite languages (Swift, Kotlin, Java, Dart, and JavaScript), bringing serverless to AI.

The Vertex AI for Firebase client SDKs enable you to harness the capabilities of the Gemini family of models directly from your mobile and web apps. You can now easily and securely run inference across all Gemini models and versions hosted by Vertex AI, including Gemini 1.5 Pro and 1.5 Flash.

Here’s how Vertex AI for Firebase makes developing AI features easier, better, and more secure for mobile and web developers:

- Effortless onboarding

- Access to the Vertex AI Gemini API from your client

- Use Firebase App Check to protect the Gemini API from unauthorized clients

- Streamline file uploads for multimodal AI prompts via Cloud Storage for Firebase

- Seamless model and prompt updates with Firebase Remote Config

Firebase magic

Effortless onboarding

Getting started with the Vertex AI Gemini API through Firebase feels just like onboarding to any other Firebase product.

The Firebase console’s new “Build with the Gemini API” page streamlines getting started with the Vertex AI Gemini API. A guided assistant walks you through enrolling in the pay-as-you-go plan, activating the necessary Vertex AI services, and generating a Firebase configuration file for your app. Once those steps are done, you’re just a few lines of code away from using Gemini models in your app.

Direct access to Gemini

Access to the Vertex AI Gemini API from your client

Firebase provides a gateway to the Vertex AI Gemini API, allowing you to use the Gemini models directly from your app via Kotlin / Java, Swift, Dart, and JavaScript SDKs.

These Firebase client SDKs give you granular control over the model’s behavior through parameters and safety settings that govern how responses are generated. Whether you’re crafting a single interaction or a multi-turn conversation (like chat), you can guide the model with system instructions that it receives before it processes the user prompt. You can also generate text responses from diverse multimodal prompts that combine text, images, PDFs, videos, and audio.
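To make “parameters and safety settings” concrete, here is a minimal JavaScript sketch of the options object you might pass as the second argument of the JavaScript SDK’s `getGenerativeModel()`. The helper name `buildModelParams` and its default values are hypothetical; the field names (`generationConfig`, `safetySettings`, `systemInstruction`) and the harm category and threshold strings follow the Gemini API, but check them against the SDK version you use.

```javascript
// Hypothetical helper: assembles model options in the shape of the Gemini API's
// generationConfig / safetySettings fields. Defaults are illustrative only.
function buildModelParams(modelName, options = {}) {
  const {
    temperature = 0.7,      // higher values give more varied output
    maxOutputTokens = 1024, // upper bound on response length
    systemInstruction,      // optional guidance sent before the user prompt
  } = options;

  if (!Number.isInteger(maxOutputTokens) || maxOutputTokens <= 0) {
    throw new RangeError("maxOutputTokens must be a positive integer");
  }

  const params = {
    model: modelName,
    generationConfig: { temperature, maxOutputTokens },
    safetySettings: [
      // Block content rated as medium probability of harm or higher.
      { category: "HARM_CATEGORY_HARASSMENT", threshold: "BLOCK_MEDIUM_AND_ABOVE" },
      { category: "HARM_CATEGORY_HATE_SPEECH", threshold: "BLOCK_MEDIUM_AND_ABOVE" },
    ],
  };
  if (systemInstruction) {
    params.systemInstruction = systemInstruction;
  }
  return params;
}

const params = buildModelParams("gemini-1.5-pro-preview-0409", {
  temperature: 0.4,
  systemInstruction: "You write inspirational quotes for posters.",
});
console.log(JSON.stringify(params, null, 2));
```

In an app, the result would be passed along as `getGenerativeModel(vertexAI, params)`; keeping the options in one builder makes it easy to tune them per feature.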

Using system instructions

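```swift
import FirebaseVertexAI
import UIKit

// Initialize Vertex AI and a model that carries a system instruction.
let vertex = VertexAI.vertexAI()
let model = vertex.generativeModel(
  modelName: "gemini-1.5-pro-preview-0409",
  systemInstruction: ModelContent(
    role: "system",
    // Trailing spaces keep the concatenated words separate.
    parts: "You write inspirational, original, and impactful " +
      "quotes for posters that motivate people and offer insightful " +
      "perspectives on life."
  )
)

// Load the poster image (source elided in the original).
guard let image = UIImage(...) else { fatalError() }

// Send a multimodal prompt (image + text); call from an async throwing context.
let response = try await model.generateContent(image, "What quote should I put on this poster?")
if let text = response.text {
  print(text)
}
```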
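For the multi-turn conversations (like chat) mentioned earlier, the model needs the earlier turns with every new request. The Firebase SDKs provide their own chat helpers (for example, `startChat()` in the JavaScript SDK), so the following is only a minimal sketch of the idea: each turn is stored as a `{ role, parts }` entry, the Gemini API's content shape, and the full history is sent along with the new message. The `ChatSession` class here and its `generate` callback are hypothetical stand-ins for the real SDK objects.

```javascript
// Minimal sketch of chat-history bookkeeping. `generate` is a hypothetical
// async function that receives all contents and returns the model's reply text.
class ChatSession {
  constructor(generate, history = []) {
    this.generate = generate;
    this.history = [...history];
  }

  async sendMessage(text) {
    const userTurn = { role: "user", parts: [{ text }] };
    // The model sees every previous turn plus the new user message.
    const reply = await this.generate([...this.history, userTurn]);
    // Record the exchange only after the call succeeds.
    this.history.push(userTurn, { role: "model", parts: [{ text: reply }] });
    return reply;
  }
}

// Usage with a stub model that reports how many contents it received.
const stubModel = async (contents) => `seen ${contents.length}`;
const chat = new ChatSession(stubModel);
chat.sendMessage("Hi!").then((reply) => console.log(reply));
```

Appending the turns only after a successful call keeps a failed request from leaving a dangling user message in the history.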

For scenarios that demand immediate feedback, you can stream responses in real time.

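```kotlin
import com.google.firebase.Firebase
import com.google.firebase.vertexai.vertexAI

// Run inside a coroutine (for example, lifecycleScope.launch { ... }),
// because collect() is a suspending function.
val generativeModel = Firebase.vertexAI.generativeModel("gemini-1.5-pro-preview-0409")

val prompt = "Write a story about a magic backpack."
var response = ""
generativeModel.generateContentStream(prompt).collect { chunk ->
  // chunk.text is nullable; skip chunks that carry no text.
  chunk.text?.let { text ->
    print(text)
    response += text
  }
}
```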
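The Kotlin example above prints each chunk as it arrives while accumulating the full story. The same pattern in JavaScript is sketched below. In the Firebase JavaScript SDK, `generateContentStream()` resolves to an object whose `stream` property is an async iterable of chunks that expose `text()`; that shape is assumed here, and the `onChunk` callback is a hypothetical hook for updating your UI.

```javascript
// Consume an async iterable of response chunks, report each piece as it
// arrives, and return the accumulated text once the stream ends.
async function collectStreamText(stream, onChunk = () => {}) {
  let full = "";
  for await (const chunk of stream) {
    const piece = chunk.text();
    onChunk(piece); // e.g. append to a text view incrementally
    full += piece;
  }
  return full;
}

// Stand-in for a real stream, so the sketch runs without a backend.
async function* fakeStream() {
  for (const text of ["Once ", "upon ", "a time"]) {
    yield { text: () => text };
  }
}

collectStreamText(fakeStream(), (piece) => process.stdout.write(piece))
  .then((story) => console.log(`\n${story.length} characters`));
```

With the real SDK, the call would look like `const { stream } = await model.generateContentStream(prompt);` followed by `await collectStreamText(stream, render)`.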

To make responses easier to process further, the Gemini API can return JSON output, letting the model produce structured data that you can parse directly into your own objects. Additionally, function calling connects Gemini models to external systems, so generated content can incorporate up-to-date information. You provide the model with function descriptions; during an interaction, it may ask your app to execute one of those functions and then use the result to give a more complete, better-informed answer.
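Both ideas can be illustrated in plain JavaScript, independent of any SDK. In the sketch below, `parseQuotes()` validates a JSON response against a hypothetical `{ quote, author }` schema (assuming the model was asked for JSON, for example via `responseMimeType: "application/json"` in `generationConfig`). `runFunctionCall()` maps a call requested by the model to a local handler and wraps the result as a `functionResponse` part to send back. The `getWeather` function, its declaration, and its canned result are hypothetical, and the schema type strings are assumptions; use your SDK's schema-type enum in practice.

```javascript
// Structured output: parse and validate a JSON array of { quote, author }.
function parseQuotes(responseText) {
  const data = JSON.parse(responseText);
  if (!Array.isArray(data)) throw new TypeError("expected a JSON array");
  return data.map(({ quote, author }) => {
    if (typeof quote !== "string") throw new TypeError("quote must be a string");
    return { quote, author: author ?? "Unknown" };
  });
}

// Function calling: describe a hypothetical function to the model...
const functionDeclarations = [
  {
    name: "getWeather",
    description: "Returns the current weather for a city.",
    parameters: {
      type: "OBJECT", // assumed schema type spelling
      properties: { city: { type: "STRING", description: "City name" } },
      required: ["city"],
    },
  },
];

// ...and keep local implementations keyed by the same names.
const handlers = {
  // Canned stand-in; a real app would call a weather service here.
  getWeather: ({ city }) => ({ city, forecast: "sunny", temperatureC: 21 }),
};

// Turn a { name, args } call requested by the model into the
// functionResponse part that is sent back so the model can finish its answer.
function runFunctionCall({ name, args }) {
  const handler = handlers[name];
  if (!handler) throw new Error(`Unknown function: ${name}`);
  return { functionResponse: { name, response: handler(args) } };
}

console.log(parseQuotes('[{"quote":"Keep going.","author":"Anon"}]'));
console.log(runFunctionCall({ name: "getWeather", args: { city: "Paris" } }));
```

In the JavaScript SDK, the declarations would be supplied through the `tools` option as `[{ functionDeclarations }]`, and requested calls can be read from `result.response.functionCalls()`.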

Protect against unauthorized access

Use Firebase App Check to protect the Gemini API from unauthorized clients

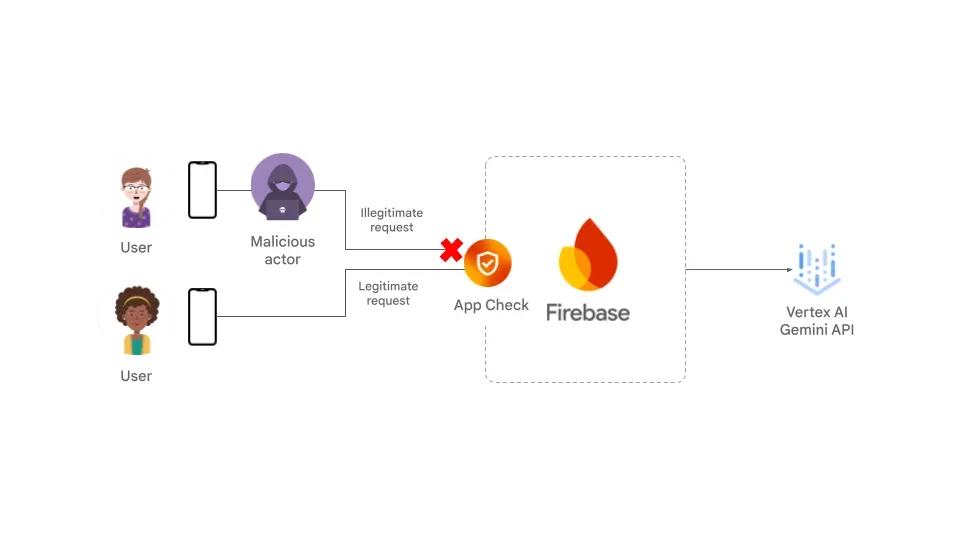

When apps run on user devices, protecting them from abuse becomes challenging. Any credential that identifies your app to a backend service (such as an API key or token) has to be stored on the client, and client-side storage is not secure. Malicious actors can extract the key, leading to unexpected costs, data breaches, or exhausted quota that degrades the experience for your legitimate users.

To solve this problem, the Vertex AI for Firebase SDKs are integrated with Firebase App Check. App Check verifies that each call to the Vertex AI Gemini API comes from your genuine app running on a genuine device, so only legitimate requests are processed. This proactive defense helps prevent unauthorized access and guards against abuse, giving you more confidence when you deploy your mobile and web apps.

Multimodal AI support

Streamline file uploads via Cloud Storage for Firebase

Cloud Storage for Firebase offers an efficient and flexible way to upload files for use in your multimodal prompts, especially on less reliable networks. Your app can upload user files (like images, videos, and PDFs) directly to a Cloud Storage bucket, and you can then reference these files in your multimodal prompts by their Cloud Storage URL. Additionally, Firebase Security Rules give you granular control over file access, so only authorized users can interact with the uploaded content.

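```javascript
import { initializeApp } from "firebase/app";
// Preview entry point for Vertex AI for Firebase at the time of this post.
import { getVertexAI, getGenerativeModel } from "firebase/vertexai-preview";

// Your Firebase project configuration, from the Firebase console.
const firebaseConfig = {
  // ...
};

const firebaseApp = initializeApp(firebaseConfig);
const vertexAI = getVertexAI(firebaseApp);
const model = getGenerativeModel(vertexAI, { model: "gemini-1.5-pro-preview-0409" });

const prompt = "What's in this picture?";
// Reference an image that was already uploaded to Cloud Storage for Firebase.
const imagePart = {
  fileData: {
    mimeType: "image/jpeg",
    fileUri: "gs://bucket-name/path/image.jpg",
  },
};

// Top-level await requires an ES module; otherwise wrap this in an async function.
const result = await model.generateContent([prompt, imagePart]);
console.log(result.response.text());
```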
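The JavaScript example above hard-codes the `gs://` URI and MIME type. A small helper can derive both from the uploaded object's bucket and path (for instance, the `bucket` and `fullPath` properties of the `StorageReference` you passed to `uploadBytes()` in `firebase/storage`), and can fall back to inline base64 data for small in-memory files. The helper names and the extension-to-MIME table below are hypothetical and deliberately incomplete; `Buffer` is Node.js-specific, so a browser build would encode with `FileReader` or `btoa` instead.

```javascript
// Hypothetical, deliberately small extension-to-MIME table.
const MIME_BY_EXTENSION = {
  jpg: "image/jpeg",
  jpeg: "image/jpeg",
  png: "image/png",
  pdf: "application/pdf",
  mp4: "video/mp4",
  mp3: "audio/mpeg",
};

function mimeTypeFor(path) {
  const ext = path.split(".").pop().toLowerCase();
  const mimeType = MIME_BY_EXTENSION[ext];
  if (!mimeType) throw new Error(`Unsupported file type: ${path}`);
  return mimeType;
}

// Reference an object in Cloud Storage by its gs:// URI; no bytes leave the client.
function storageFilePart(bucket, path) {
  return { fileData: { mimeType: mimeTypeFor(path), fileUri: `gs://${bucket}/${path}` } };
}

// Embed a small file directly in the request as base64 inline data.
function inlineFilePart(bytes, path) {
  return {
    inlineData: {
      mimeType: mimeTypeFor(path),
      data: Buffer.from(bytes).toString("base64"),
    },
  };
}

console.log(storageFilePart("bucket-name", "path/image.jpg"));
```

Referencing files by URI keeps large uploads out of the prompt request itself, which is the main reason to route bigger images, videos, and PDFs through Cloud Storage.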

Flexibility

Seamless model and prompt updates with Firebase Remote Config

Finding the right prompt for your specific use case takes time and effort, often through trial and error. Unexpected scenarios can arise and lead to unwanted user experiences, so you may need to update your prompts to keep things running smoothly. In addition, with the rapid pace of innovation in AI models and features, new model versions are released multiple times per year. You want the flexibility to update the prompts and model versions in your app without forcing users to download an app update.

Firebase Remote Config is well suited to this situation. It’s a cloud service that lets you change your app’s behavior on the fly, without requiring users to download an app update. With Remote Config, you define default values in your app for your model name and prompts. You can then use the Firebase console to override these values for all users, or target specific groups of users to run experiments and A/B tests.

Integrating with Remote Config

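```dart
import 'package:firebase_core/firebase_core.dart';
import 'package:firebase_remote_config/firebase_remote_config.dart';
import 'package:firebase_vertexai/firebase_vertexai.dart';

// Run inside an async function such as main().
await Firebase.initializeApp();

// In-app defaults apply until values are fetched from the Firebase console.
final remoteConfig = FirebaseRemoteConfig.instance;
await remoteConfig.setDefaults({
  'promptText': "What's in this picture?",
  'geminiModel': 'gemini-1.5-pro-preview-0409',
});
await remoteConfig.fetchAndActivate();

final prompt = remoteConfig.getString('promptText');
final geminiModel = remoteConfig.getString('geminiModel');
final model = FirebaseVertexAI.instance.generativeModel(model: geminiModel);

// Reference an image that was already uploaded to Cloud Storage for Firebase.
final imagePart = FileData('image/jpeg', 'gs://bucket-name/path/image.jpg');

final response = await model.generateContent([
  Content.multi([TextPart(prompt), imagePart]),
]);
print(response.text);
```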
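Remote Config returns your in-app defaults when no server value exists, but a parameter set to an empty string in the console would still override them. A defensive fallback, sketched below in JavaScript, keeps the app on a usable model name and prompt in that case. The parameter names mirror the Dart example; the `resolveGeminiSettings` helper and its blank-value rule are hypothetical choices, not part of the Remote Config API.

```javascript
// In-app defaults; values mirror the examples in this post.
const IN_APP_DEFAULTS = {
  geminiModel: "gemini-1.5-pro-preview-0409",
  promptText: "What's in this picture?",
};

// Merge fetched Remote Config values over the defaults, treating missing
// or whitespace-only values as "not set".
function resolveGeminiSettings(fetched = {}, defaults = IN_APP_DEFAULTS) {
  const pick = (key) => {
    const value = fetched[key];
    return typeof value === "string" && value.trim() !== "" ? value.trim() : defaults[key];
  };
  return { geminiModel: pick("geminiModel"), promptText: pick("promptText") };
}

// A console override for the model, with the prompt left at its default.
console.log(resolveGeminiSettings({ geminiModel: "gemini-1.5-flash" }));
```

Centralizing this fallback means a misconfigured parameter degrades to the shipped defaults instead of sending an empty model name or prompt.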

Here’s where to get started:

- Begin building your vision now with our public preview.

- Be ready to release your app to production this fall, when Vertex AI for Firebase plans to become generally available.

- Check out our quickstarts and read our documentation.

Your feedback is invaluable. Please report bugs, request features, or contribute code directly to our Firebase SDKs’ repositories. We also encourage you to participate in Firebase’s UserVoice to share your ideas and vote on existing ones.

We can’t wait to see what you build with Vertex AI for Firebase!